ETL Best Practices for a Better Data Warehouse

Data warehousing is a critical component in organizations around the world. It helps them gain insights from their data and make decisions based on it. However, without proper ETL best practices, businesses may not be able to effectively use their data warehouses. That’s why understanding the fundamentals of ETL best practices for data warehousing is essential.

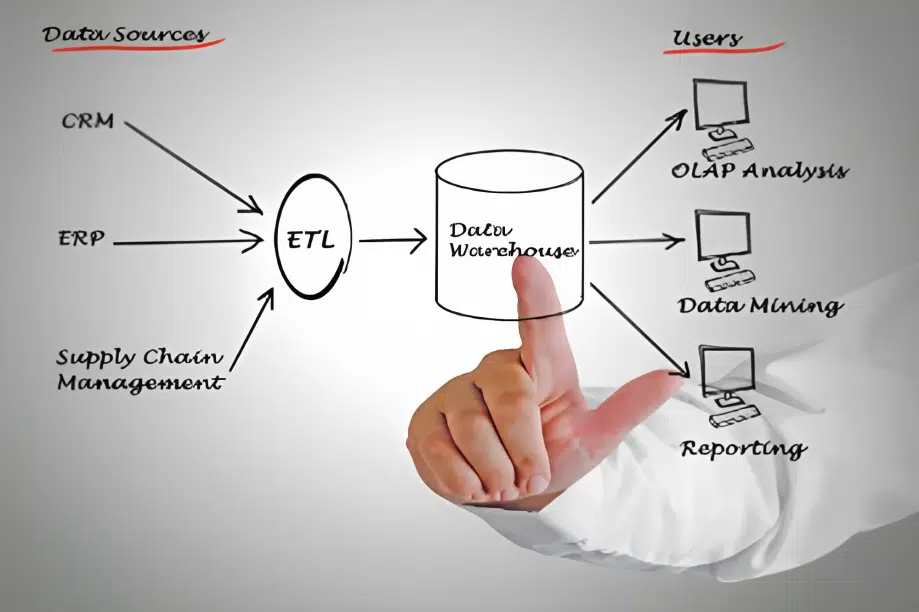

ETL stands for Extract Transform Load. It’s an important process that involves extracting data from its source, transforming it into something more useful, and then loading it into a destination database or system. This three-step process is essential for any organization that relies heavily on its data warehouses. By implementing good ETL best practices, organizations can ensure accuracy and completeness when dealing with large amounts of data.

With so many components involved in running a successful ETL pipeline, it’s easy to become overwhelmed. Fortunately, by understanding the fundamentals and following a few basic rules, you can make sure your data arrives where it’s needed, accurate and on time. The sections below cover the critical best practices for effective data warehousing.

What Is ETL?

By some estimates, roughly 80% of the effort in a data warehousing project goes into ETL operations, but what exactly is ETL? Put simply, ETL stands for Extract-Transform-Load. During this process, you extract raw facts and figures from one system or source, transform them into a consistent, usable form, and load them into another system where they can yield insights. This integration helps organizations make well-informed decisions by providing them with accurate and up-to-date information.

ETL’s mission is to take raw data from various sources and turn it into consumable intelligence for end users. During this process, large volumes of data, often inconsistent or unstructured, are collected, cleaned up, and reorganized into a format suitable for analysis. The result gives businesses a fuller picture of their customers, products, services, and other aspects of their operations, which in turn supports better-informed decisions.
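To make the three steps concrete, here is a minimal sketch of an extract, transform, and load sequence using only Python’s standard library. The sample CSV data, the `orders` table, and the function names are assumptions made for illustration; a real pipeline would read from actual source systems and load into your warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (illustrative data only).
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,5.00
3,Carol,not_a_number
"""


def extract(raw_text):
    """Extract: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: tidy names, convert amounts, skip rows that fail conversion."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would log or quarantine this row
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return cleaned


def load(rows, conn):
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database standing in for a warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easier to test and replace stages independently as sources and targets change.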

High-Level Process Overview

Now that we’ve discussed what ETL is, let’s move on to the high-level process overview. This provides a general understanding of how data warehouses are built and maintained through different stages in an ETL process.

The first step involves designing a data architecture for the warehouse. This includes creating models to help define the structure of all incoming data sources. Data integration then follows, where disparate datasets are combined into one single source using various transformation techniques. The third stage consists of implementing data governance rules. This ensures that the quality and integrity of your data can be consistently monitored over time. Ultimately, to guarantee a successful project life cycle for all stakeholders involved, it is essential that there is complete documentation and communication during each phase.

With that broad picture in mind, let’s look at specific strategies for assuring data quality as you build your data warehouse.

Data Quality Assurance Strategies

Quality assurance is an integral part of the data warehousing ETL process. Without reliable checks and protocols in place, small errors can quickly grow into major problems. To get the best possible outcomes, verify that all collected information is complete, accurate, and appropriate for its intended purpose.

Crafting reliable data quality assurance strategies requires thoughtful consideration of numerous essential components. It is fundamental to establish distinct objectives for your data warehouse project in order to ensure successful results. This will help you identify which types of quality checks are necessary throughout the process.

Additionally, it’s important to establish consistent procedures for checking each step to ensure accuracy and completeness. Finally, having defined criteria for assessing data quality can help ensure consistency across all stages of development and implementation.
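One way to express defined quality criteria is as a set of named rules applied to every record. The sketch below assumes three hypothetical rules and field names (`customer_id`, `amount`, `country`); your own criteria will depend on the objectives of your project.

```python
# Hypothetical quality rules: each maps a rule name to a predicate over one record.
RULES = {
    "customer_id is present": lambda r: r.get("customer_id") not in (None, ""),
    "amount is non-negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
    "country is a 2-letter code": lambda r: isinstance(r.get("country"), str)
    and len(r["country"]) == 2,
}


def check_quality(records, rules=RULES):
    """Return a list of (record_index, failed_rule_name) pairs."""
    failures = []
    for index, record in enumerate(records):
        for name, predicate in rules.items():
            if not predicate(record):
                failures.append((index, name))
    return failures


records = [
    {"customer_id": "C1", "amount": 25.0, "country": "US"},
    {"customer_id": "", "amount": 10.0, "country": "DE"},
    {"customer_id": "C3", "amount": -4.0, "country": "France"},
]

failures = check_quality(records)
for index, rule in failures:
    print(f"record {index} failed: {rule}")
```

Because the rules live in one place, the same criteria can be reused at every stage of the pipeline, which supports the consistency described above.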

Using these approaches as a foundation, organizations can build robust methods of ensuring their data warehouses deliver clean, reliable information at every stage of production and delivery. By taking steps like these early on in the process, organizations can drastically reduce their risk of dealing with costly mistakes down the line due to inaccurate or incomplete datasets.

As such, investing time upfront into creating a comprehensive plan around data quality assurance could save significant amounts of money further down the road. Ultimately, this will result in greater success with any given project.

Transformations and Cleaning Techniques

Data cleaning and preparation are often said to take up as much as 80% of a data analyst’s time. That’s why it’s important to understand the various transformations and cleaning techniques used in ETL best practices for data warehousing. Data transformation refers to any manipulation of raw datasets into formats more suitable for analysis. Cleaning techniques include data cleansing, scrubbing, and normalization, which help ensure accuracy and consistency throughout the database.

Data cleansing involves correcting or removing erroneous values and handling missing ones. This process can also involve standardizing field names across multiple tables, so they’re uniform throughout the warehouse. Data scrubbing takes this one step further by reviewing all entries within a dataset and flagging potential problems such as incorrect date formats or duplicate records.
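As a rough illustration of cleansing and scrubbing, the sketch below standardizes dates to one format and flags unrecognized dates and duplicate records instead of silently dropping them. The field names (`id`, `signup_date`) and the list of accepted date formats are assumptions for the example.

```python
from datetime import datetime

# Date layouts we assume might appear in the source (illustrative, not exhaustive).
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def standardize_date(value):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def scrub(rows):
    """Split rows into clean rows and flagged (row, reason) pairs."""
    clean, flagged, seen_ids = [], [], set()
    for row in rows:
        iso_date = standardize_date(row["signup_date"])
        if iso_date is None:
            flagged.append((row, "unrecognized date format"))
        elif row["id"] in seen_ids:
            flagged.append((row, "duplicate id"))
        else:
            seen_ids.add(row["id"])
            clean.append({**row, "signup_date": iso_date})
    return clean, flagged


rows = [
    {"id": 1, "signup_date": "2023-03-05"},
    {"id": 2, "signup_date": "05/03/2023"},
    {"id": 2, "signup_date": "2023-03-05"},
    {"id": 3, "signup_date": "March 5th"},
]
clean, flagged = scrub(rows)
print(clean)
print([reason for _, reason in flagged])
```

Flagged rows are kept alongside a reason so that someone can review them later, rather than losing the data.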

Finally, we have data normalization — an essential part of organizing information for easy access and retrieval. Normalization divides large tables into smaller ones with dependent relationships between them to reduce redundancy in the system.
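The idea of splitting one large table into smaller related ones can be sketched as follows. The flat `orders_flat` data and the choice of email address as the customer’s natural key are assumptions made for illustration.

```python
# A denormalized table: customer details repeat on every order row (illustrative data).
orders_flat = [
    {"order_id": 1, "customer_email": "a@example.com", "customer_name": "Alice", "total": 30.0},
    {"order_id": 2, "customer_email": "b@example.com", "customer_name": "Bob", "total": 12.5},
    {"order_id": 3, "customer_email": "a@example.com", "customer_name": "Alice", "total": 8.0},
]


def normalize(flat_rows):
    """Split flat rows into a customers table and an orders table linked by customer_id."""
    customers = {}  # email -> customer row
    orders = []
    for row in flat_rows:
        email = row["customer_email"]
        if email not in customers:
            customers[email] = {
                "customer_id": len(customers) + 1,
                "email": email,
                "name": row["customer_name"],
            }
        orders.append({
            "order_id": row["order_id"],
            "customer_id": customers[email]["customer_id"],
            "total": row["total"],
        })
    return list(customers.values()), orders


customers, orders = normalize(orders_flat)
print(customers)
print(orders)
```

Each customer now appears once, and orders refer to customers by `customer_id`, which removes the repeated name and email values.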

Error Handling Methods

When processing ETL jobs, it is paramount to detect errors quickly so they don’t accumulate further down the pipeline. To do this, you’ll need an efficient logging system as well as tracking capabilities, so your team can address issues as soon as they appear.

Incorporating error detection into your ETL process is essential for effective data management. Anticipate where errors are likely to occur and equip yourself with strategies to prevent them from occurring in the first place – such as validating input fields and ensuring that the data meets predefined rules or standards. Doing so ensures greater accuracy of your overall results. Finally, having a plan for resolving errors once detected is paramount, whether that involves restarting failed processes or implementing manual fixes promptly and accurately.
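The three ideas above (validating input, logging failures, and restarting failed steps) might look like this in a small script. The validation rules, the retry count, and the `flaky_extract` stand-in are all hypothetical; treat this as a sketch rather than a complete error-handling framework.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")


def validate(record):
    """Raise ValueError when a record breaks a predefined rule (rules are illustrative)."""
    if not record.get("id"):
        raise ValueError("missing id")
    if record.get("quantity", 0) < 0:
        raise ValueError("negative quantity")


def run_with_retries(step, attempts=3, delay_seconds=0.0):
    """Run a step, logging each failure and retrying up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except Exception as exc:
            log.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise
            time.sleep(delay_seconds)


def process(records):
    """Validate records, routing bad ones to a reject list instead of halting the job."""
    accepted, rejected = [], []
    for record in records:
        try:
            validate(record)
            accepted.append(record)
        except ValueError as exc:
            log.error("rejected %r: %s", record, exc)
            rejected.append((record, str(exc)))
    return accepted, rejected


calls = {"count": 0}


def flaky_extract():
    """Stand-in for a source that fails once before succeeding."""
    calls["count"] += 1
    if calls["count"] < 2:
        raise ConnectionError("source unavailable")
    return [{"id": 1, "quantity": 2}, {"id": None, "quantity": 1}, {"id": 3, "quantity": -5}]


records = run_with_retries(flaky_extract)
accepted, rejected = process(records)
print(len(accepted), "accepted;", len(rejected), "rejected")
```

Rejected records are collected with the reason they failed, which gives the team something concrete to fix manually when a restart alone is not enough.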

With these approaches in place, you’ll have greater control over how errors are handled during your ETL processes. The next area to consider is security.

Security Considerations

Data security is an important part of data warehousing, and it requires a well-thought-out plan. It’s not just about protecting the data from malicious actors. It’s also about safeguarding against accidental errors or system malfunctions that could compromise data integrity. To ensure your data warehouse is secure, consider implementing security protocols such as risk assessments and access control systems. Additionally, encryption methods can provide an extra layer of protection for sensitive information in your database.
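As a small illustration of access control, the sketch below limits which columns each role can read. The roles and column names are hypothetical, and in practice this kind of rule is usually enforced by the database or warehouse platform itself rather than in application code. Encryption is not shown here, since it is best left to a vetted library or the platform’s built-in features.

```python
# Hypothetical role-to-column permissions (illustrative only).
ROLE_COLUMNS = {
    "analyst": {"order_id", "region", "revenue"},
    "support": {"order_id", "customer_email"},
}


def visible_row(row, role, role_columns=ROLE_COLUMNS):
    """Return only the columns the role may read; unknown roles see nothing."""
    allowed = role_columns.get(role, set())
    return {column: value for column, value in row.items() if column in allowed}


row = {"order_id": 9, "customer_email": "a@example.com", "region": "EMEA", "revenue": 120.0}
analyst_view = visible_row(row, "analyst")
unknown_view = visible_row(row, "intern")
print(analyst_view)
print(unknown_view)
```

Defaulting unknown roles to an empty set means a misconfigured role sees no data instead of all of it.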

In today’s digital landscape, companies must take proactive steps to protect their data assets. Security measures like encryption algorithms and authentication processes are essential tools businesses should use to keep their valuable information safe and secure. However, no matter how many safeguards you have in place, there will always be some degree of risk with any type of technology system or process — including data warehousing. That’s why it’s so critical to remain vigilant and periodically review existing procedures to make sure they are up-to-date with industry best practices and standards.

By taking these simple precautions, businesses can minimize their exposure to potential threats. They can also ensure their databases remain reliable sources of mission-critical information. With a sound security strategy in place, organizations can confidently move on to exploring more advanced concepts related to data modeling techniques.

Data Modeling Techniques

Data modeling involves designing a database structure that organizes business processes and their associated information into tables, columns, and relationships. It is an essential part of ETL best practices for data warehousing.

One popular approach in dimensional modeling is the star schema. It consists of fact tables that hold numeric measures, such as revenue or sales quantity, along with dimension tables that provide additional context, such as customer information or product category. A fact table is typically linked to multiple dimension tables, forming a star shape, hence the name. The advantage of this design is that analytical queries over large datasets can be written as simple SQL joins between the fact table and a few small dimension tables, which keeps them easy to write and to optimize.
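Here is a minimal star schema built in an in-memory SQLite database, with one fact table and two dimension tables. The table names, columns, and sample rows are assumptions for illustration; a production warehouse would have many more dimensions and attributes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE dim_product  (product_key  INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE fact_sales (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    product_key  INTEGER REFERENCES dim_product(product_key),
    quantity     INTEGER,
    revenue      REAL
);
INSERT INTO dim_customer VALUES (1, 'Alice', 'EMEA'), (2, 'Bob', 'APAC');
INSERT INTO dim_product  VALUES (1, 'Widget', 'Hardware'), (2, 'Manual', 'Books');
INSERT INTO fact_sales VALUES (1, 1, 2, 40.0), (1, 2, 1, 15.0), (2, 1, 5, 100.0);
""")

# Join the fact table to one dimension and aggregate a measure by a dimension attribute.
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(revenue_by_category)
```

The same pattern extends to any dimension: join `fact_sales` to `dim_customer` to break revenue down by region, for example.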

Understanding how to properly model data for your warehouse helps ensure optimal performance during ETL operations and analytics workloads alike. With these techniques under our belt, we’re ready to look at specific approaches to performance optimization.

Performance Optimization Approaches

I’m sure you’ve heard the phrase ‘time is money,’ and when it comes to ETL optimization, this couldn’t be more true. To ensure your data warehouse runs in its optimal performance state, there are a few techniques you can employ.

Performance tuning should begin with the data loading process, making sure that all queries are optimized for efficiency during loading. This can include restructuring queries, filtering out columns you don’t need, or sorting rows before loading.

Additionally, data caching can help reduce the time necessary for each query by storing frequently accessed results in memory, so they don’t need to be recomputed every single time. By focusing on these two areas of optimization, your system will run faster and smoother!
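Both ideas, trimming data before loading and caching repeated lookups, can be sketched with the standard library’s `functools.lru_cache`. The `region_for_country` lookup and the column names are hypothetical stand-ins for an expensive query against a reference table.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def region_for_country(country_code):
    """Stand-in for an expensive lookup, e.g. a query against a reference table."""
    regions = {"US": "AMER", "DE": "EMEA", "JP": "APAC"}
    return regions.get(country_code, "UNKNOWN")


def prepare_for_load(rows, needed_columns):
    """Keep only the columns the warehouse needs and attach the cached region."""
    prepared = []
    for row in rows:
        slim = {column: row[column] for column in needed_columns}
        slim["region"] = region_for_country(row["country"])
        prepared.append(slim)
    return prepared


rows = [
    {"id": 1, "country": "US", "notes": "x" * 50},
    {"id": 2, "country": "DE", "notes": "y" * 50},
    {"id": 3, "country": "US", "notes": "z" * 50},
]
prepared = prepare_for_load(rows, needed_columns=("id", "country"))
cache_stats = region_for_country.cache_info()
print(prepared)
print(cache_stats)
```

In this run the lookup is computed only for the first `US` and `DE` rows; the second `US` row is served from the cache. Caching only helps when the same inputs recur and the underlying data does not change during the run.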

Automation of Processes

Having explored the performance optimization approaches in the previous section, it’s now time to explore the automation of processes. Automation is key when it comes to ETL best practices for data warehousing, as it can help streamline workflows and improve accuracy during data processing. Automation can be applied across a variety of tasks related to ETL operations, such as process automation, data automation, task automation, and workflow automation.

With automated processes, data transformation runs faster and avoids the input mistakes that often occur during manual operations. Plus, since these processes are repeatable, they are more reliable than non-automated solutions, which makes them ideal for large-scale projects involving multiple datasets. Data teams also benefit from lower operating costs, because automated tools or scripts minimize the need for human intervention.

In addition to saving time and money on operational costs, automating processes can give businesses greater visibility into their data by providing detailed reporting on how information flows through the various systems within an organization. These insights allow decision-makers to act on available data quickly and accurately.
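Workflow automation often comes down to running tasks in dependency order without anyone triggering them by hand. The sketch below uses `graphlib.TopologicalSorter` from the standard library (Python 3.9 and later); the task names and the placeholder tasks are assumptions, and dedicated orchestration tools add scheduling, retries, and monitoring on top of this basic idea.

```python
from graphlib import TopologicalSorter

run_log = []  # records the order in which tasks ran


def make_task(name):
    """Build a placeholder task that only records that it ran."""
    def task():
        run_log.append(name)
    return task


# Each key lists the tasks it depends on (task names are hypothetical).
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
    "report": {"load"},
}
TASKS = {name: make_task(name) for name in DEPENDENCIES}


def run_workflow(dependencies, tasks):
    """Run every task after all of its dependencies have completed."""
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()


run_workflow(DEPENDENCIES, TASKS)
print(run_log)
```

Declaring dependencies as data, rather than hard-coding the call order, makes the workflow repeatable and easy to extend with new steps.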

Reporting Requirements

Coming to terms with data reporting is an essential part of any successful data warehousing project. Reporting requirements are the key to understanding how your business works and what it needs in order to reach its goals. The report design should be easy enough for anyone to understand, while still providing all necessary information. Automation also plays a major role when working on reports. Report analysis can help identify areas that need improvement and make sure you stay ahead of the competition.

It’s important to take auditing and compliance needs into consideration during this process as well. Understanding these demands will ensure the accuracy and trustworthiness of your reports so that your stakeholders can use them confidently. Making sure all reporting processes are efficient and up-to-date will keep your organization running smoothly and allow you to capitalize on opportunities quickly.

Auditing and Compliance Needs

Moving on from discussing reporting requirements, it is important to understand the need for auditing and compliance when it comes to data warehousing. To ensure accuracy in our reports and maintain standards of quality, we must adhere to a set of rules that regulate our practices. Auditing and compliance needs provide us with an extra layer of assurance by requiring checks and balances so that any errors within the system can be quickly identified and corrected.

When implementing ETL best practices, organizations should consider their legal obligations regarding regulations related to data handling. Having an established process for tracking changes helps ensure that any new or modified information complies with applicable laws and industry standards. This ensures data integrity while also reducing the risk associated with non-compliance. It’s essential to have proper tools in place to monitor all activities taking place during the ETL process, which will help identify any potential issues before they become too large.
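A simple way to track changes is an append-only audit log that records who changed which row and when, along with a fingerprint of the row before and after. The function names, log fields, and the use of SHA-256 hashes are choices made for this sketch, not requirements of any particular regulation.

```python
import hashlib
import json
from datetime import datetime, timezone


def row_fingerprint(row):
    """Hash a row's contents so later changes can be detected."""
    canonical = json.dumps(row, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_change(audit_log, table, key, before, after, actor):
    """Append one audit entry describing who changed what, and when."""
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "table": table,
        "key": key,
        "before_hash": row_fingerprint(before) if before is not None else None,
        "after_hash": row_fingerprint(after),
    })


audit_log = []
old_row = {"id": 7, "email": "old@example.com"}
new_row = {"id": 7, "email": "new@example.com"}
record_change(audit_log, "customers", 7, old_row, new_row, actor="nightly_etl")
print(audit_log[0])
```

Storing hashes instead of full row contents keeps sensitive values out of the log while still making it possible to prove whether a row changed.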

Now, let us investigate the influence that connecting to external sources has on our ETL processes…

Integration with External Sources

Understanding the distinct types of data integration and how to combine them is essential for the harmonious completion of any data warehousing project. Data mapping, flows, and consolidation are among the most common methods to this end. With their use, you are one step closer to maximizing efficiency in your projects!

Data mapping defines a direct correspondence between fields in the source and target systems, so information can be transferred quickly without manual intervention. This approach improves precision by eliminating mistakes caused by human input. Data flows move data from one system to another and should protect it against unauthorized access or manipulation along the way. Finally, data consolidation combines multiple datasets into a single repository, so organizations can make informed decisions based on combined insights from all available resources.
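Data mapping can be expressed as a small table that says, for each target column, which source field it comes from and how to convert it. The field names and conversions below are hypothetical examples.

```python
# Hypothetical mapping: target column -> (source field, conversion function).
FIELD_MAP = {
    "customer_id": ("CustID", int),
    "full_name": ("Name", str.strip),
    "signup_year": ("SignupDate", lambda value: int(value[:4])),
}


def map_record(source_record, field_map=FIELD_MAP):
    """Build a target record by applying the mapping to one source record."""
    return {
        target: convert(source_record[source])
        for target, (source, convert) in field_map.items()
    }


source = {"CustID": "42", "Name": "  Ada Lovelace ", "SignupDate": "2021-06-30"}
target = map_record(source)
print(target)
```

Keeping the mapping in one declarative structure means a change in the source system only requires updating the table, not the code that applies it.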

With careful planning and execution, integrating external sources with an existing system has the potential to unlock powerful new insights and help drive organizational success. However, it’s essential not only to get these strategies right but also to plan ahead for scaling efforts as your business grows over time.

Scaling for Future Growth

Scaling for future growth is like preparing a power plant for peak usage – you must plan ahead and anticipate the changing needs of your data warehouse. With proper scalability planning, you can future-proof your data warehouse to handle unexpected surges in data growth.

Here are three key steps that will ensure your data warehouse remains agile and scalable:

- Measure existing data volume regularly

- Monitor server utilization closely

- Adapt quickly with flexible infrastructure solutions

Having a strategy in place to scale up or down depending on fluctuations in data volume helps keep costs low while allowing organizations to respond swiftly when faced with unexpected spikes in demand. Cloud-based solutions are becoming increasingly popular as they offer an efficient way to increase storage capacity without having to invest in additional hardware.
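Regular volume measurements become more useful when you turn them into a projection. The sketch below estimates how many days remain before storage fills up, under the strong assumption that growth stays roughly linear; the sample measurements and the capacity figure are illustrative.

```python
def average_daily_growth(volumes_gb):
    """Average day-over-day growth from a list of daily volume measurements."""
    if len(volumes_gb) < 2:
        raise ValueError("need at least two measurements")
    return (volumes_gb[-1] - volumes_gb[0]) / (len(volumes_gb) - 1)


def days_until_full(volumes_gb, capacity_gb):
    """Estimate days until capacity is reached, assuming growth stays linear."""
    growth = average_daily_growth(volumes_gb)
    if growth <= 0:
        return None  # not growing, so no projected fill date
    remaining = capacity_gb - volumes_gb[-1]
    return max(remaining / growth, 0.0)


daily_volumes_gb = [400, 410, 420, 430, 440]  # illustrative measurements
estimate = days_until_full(daily_volumes_gb, capacity_gb=500)
print(estimate)
```

A linear estimate will miss sudden spikes, so it works best as an early warning alongside the server utilization monitoring mentioned above.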

Cloud-Based Solutions

When it comes to data warehousing, cloud-based solutions are becoming increasingly popular. By working with a cloud-based provider, you can get access to all the features and functions of an on-premises solution without having to actually purchase or manage any hardware or software yourself. This is great for businesses that want fast deployment and scalability but don’t have time to invest in setting up their own IT infrastructure.

Plus, many cloud-based solutions come with built-in tools for data governance, security, and compliance. So, you can be sure your data is safe and secure no matter where you store it. And because these services are typically instant-deployment ready, you won’t have to wait weeks for everything to be set up before you’re able to start using them. With the wide range of convenience and flexibility cloud-based data warehousing offers, it’s time to discover its potential now!

The following step involves exploring deployment and maintenance approaches that will guarantee your system’s long-term stability.

Deployment and Maintenance Strategies

Ah, deployment and maintenance strategies. The bane of any data warehousing professional’s existence! Wouldn’t it be incredible if there was a simpler approach to guarantee that your ETL procedures are running consistently and effectively without the monotony of ceaselessly monitoring them? Sadly, this is still an unfulfilled desire.

Thankfully, there are solutions that allow you to keep your ETL processes running smoothly while you attend to other tasks. Cloud migration is a great option for swiftly deploying and managing data warehouses. By moving your warehouse to the cloud, you can take advantage of automated services such as scheduled backups and security monitoring, so you no longer have to handle them yourself.

Additionally, cloud-based solutions offer greater scalability, meaning you can easily adjust resources depending on need or budget constraints. All this translates into less manual labor overall and more peace of mind when it comes to keeping up with your ETL processes.

So if you’re looking for ways to minimize effort while keeping your data warehouses healthy and secure at the same time, then look no further than cloud migration for a reliable solution that will save both time and money over the long run.

Frequently Asked Questions

What is the best ETL tool to use?

Wondering what the best ETL tool is for data warehousing? Choosing the right technology can be a critical step in ensuring the accuracy, security, and efficiency of your data transformation process. To help you make an informed decision, here are some important considerations to keep in mind:

- Data Accuracy: Make sure that the chosen ETL tool provides accurate data processing with minimal errors or distortions.

- Cloud ETL: Look for cloud-based solutions to quickly process a large number of records without sacrificing quality or time.

- Security Considerations: Ensure that your ETL solution has robust security measures in place. This will protect sensitive information from unauthorized access and malicious attacks.

- Cost Effectiveness: Find an affordable yet reliable option that meets all your needs while staying within budget constraints.

With these features in mind, it’s clear that there isn’t one single answer as to which ETL tool is best for data warehousing – this will depend on your specific requirements like scalability, complexity, and cost-effectiveness. However, by taking into account factors such as performance, accuracy, and security, you can find the ideal choice for your organization’s particular needs.

How much time is necessary to complete an ETL process?

This is a frequent question among data warehousing practitioners. The answer depends on the complexity of each project, as well as factors such as the number of sources and target databases. Generally speaking, every ETL process needs its own span of time to complete, and there is no single figure that applies to all of them.

When deliberating how much time to dedicate to an ETL process, it’s important to contemplate all aspects of the project – including timeline expectations and system needs. For example, if a vast amount of data must be transferred quickly between several systems or locations, you’ll need more time than what is usually allotted for your ETL procedure. On the other hand, less complex projects can often be completed in a shorter period of time with fewer resources required. It’s up to you and your team to determine which approach works best for each specific situation.

In addition to understanding these nuances associated with completing an ETL process in terms of timing, there are also some general tips worth keeping in mind when attempting this type of task. Make sure you have clear goals in place prior to starting work on your project. This will ensure that everyone involved knows what they’re aiming towards.

Additionally, create detailed plans outlining what you need to accomplish during each step. This will reduce confusion while increasing efficiency across all departments throughout the entire ETL process time frame. Doing this will help ensure successful outcomes every single time!

What are the most important security considerations for ETL?

When it comes to ETL, security considerations are among the most important factors for a successful process, and data security is paramount. All facets of information protection must be taken into account to ensure the safety and confidentiality of the collected data throughout its life cycle. Preserving data integrity during transformation is also vital, as even a tiny mistake could have major repercussions in later operations.

Cloud security is equally vital when running an ETL process, because confidential information stored in the cloud needs to remain secure. Security functions such as encryption and access control help protect your data from misuse or manipulation while keeping it available when required. By prioritizing these measures, you create a more secure environment and reduce the risks associated with running an ETL process.

By considering all aspects of data security and continually striving for improved protection measures, companies can make sure their valuable information remains safe throughout the entire ETL journey. This helps them safeguard against malicious attacks and adverse effects due to mishandling or misuse of sensitive data. Taking proactive steps toward creating a secure environment is crucial for protecting both company resources and customer privacy.

What is the best way to ensure data accuracy?

When it comes to ensuring data accuracy, there are a few best practices that you can follow. Data accuracy assurance is an important part of any ETL process. Therefore, you should not overlook it when developing a data warehouse.

One way to guarantee accuracy is through regular data quality assurance checks. This involves running consistency tests on all incoming or stored data sets to identify potential discrepancies before they affect other parts of the system. Additionally, implementing automated accuracy testing processes at different stages throughout the ETL lifecycle can help catch errors early on.

Furthermore, manual accuracy verification of source systems prior to loading into the warehouse helps confirm that only valid records are being transferred over. Lastly, performing routine data consistency checks after each transformation step ensures that results remain accurate and up-to-date. Here’s a summary list:

- Regular Data Quality Assurance Checks

- Automated Accuracy Testing Processes

- Manual Accuracy Verification

- Routine Data Consistency Checks

These steps can make sure you have clean and reliable data in your warehouse. This is essential for making business decisions with confidence. Understanding how these measures work together to create an efficient ETL pipeline makes it easier for teams to monitor their performance metrics and deliver high-quality results every time. Plus, having standardized procedures in place reduces tedious tasks while increasing overall productivity within the team!
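One common consistency check is to reconcile row counts and column totals between the source (or staging) table and the warehouse table after a load. The sketch below uses SQLite for illustration; the table names, the `amount` column, and the `reconcile` helper are assumptions for this example.

```python
import sqlite3


def reconcile(conn, source_table, target_table, amount_column):
    """Compare row counts and column totals between two tables.

    Table and column names are interpolated directly into the SQL, so they
    must come from trusted configuration, never from user input.
    """
    results = {}
    for label, table in (("source", source_table), ("target", target_table)):
        count, total = conn.execute(
            f"SELECT COUNT(*), COALESCE(SUM({amount_column}), 0) FROM {table}"
        ).fetchone()
        results[label] = {"rows": count, "total": total}
    results["match"] = results["source"] == results["target"]
    return results


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE staging_orders (order_id INTEGER, amount REAL);
CREATE TABLE warehouse_orders (order_id INTEGER, amount REAL);
INSERT INTO staging_orders VALUES (1, 10.0), (2, 20.0), (3, 30.0);
INSERT INTO warehouse_orders VALUES (1, 10.0), (2, 20.0);
""")
report = reconcile(conn, "staging_orders", "warehouse_orders", "amount")
print(report)
```

Here the check reports a mismatch because one staging row never reached the warehouse table, which is exactly the kind of discrepancy an automated check should surface before anyone relies on the data.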

Are there any specific challenges that come with using cloud-based ETL solutions?

Are you considering using cloud-based ETL solutions for your data warehouse? It’s a smart move, as the use of such technologies is steadily increasing. In fact, according to an analysis by Gartner Research, over 50% of organizations were expected to be using some form of cloud-based ETL solution by 2022.

However, there are specific challenges that come with leveraging this type of technology. The most important ones include:

- Data Security: Security threats can easily arise when dealing with sensitive information in the cloud. Your business should address such risks through rigorous authentication processes and encryption protocols.

- Data Accuracy: When working with large amounts of data, make sure that all incoming information is accurate and up-to-date. This requires careful planning and the implementation of automated validation checks throughout the process.

- Performance & Scalability: Cloud-based ETL solutions need to be able to handle both current workloads as well as potential future growth in order to ensure uninterrupted performance across multiple locations or departments.

In addition to these key considerations, taking advantage of emerging advancements such as artificial intelligence (AI) and machine learning (ML) can also help ensure success in data warehousing projects involving cloud-based ETL solutions. By applying AI/ML techniques for predictive analytics, businesses can gain deeper insights into customer behavior while reducing the manual effort associated with traditional ETL tasks like cleansing and integration.

Therefore, if used effectively and securely, cloud-based ETL solutions can offer great benefits when it comes to managing data warehouses efficiently. With the right tools at hand, organizations have an opportunity to unlock powerful insights from their data while ensuring accuracy and scalability along the way.

Conclusion

In conclusion, the ETL process is an essential part of data warehousing and can help ensure that your data is accurate. As such, it’s important to be aware of best practices when performing this task. When selecting an ETL tool, consider how much time you need for completion and the security needs of your system.

Additionally, double-check accuracy in order to avoid costly errors down the road. Finally, if using cloud-based solutions, keep in mind any particular challenges they may present. In summary, with careful consideration and preparation, a successful ETL process can take us from darkness into light – illuminating our way forward!

If you’d like professional help with your ETL needs, Datrick can help. Schedule an intro call to discuss your business goals and expectations.